Artificial intelligence (AI) is transforming industries, and as its influence grows, so does the need to address its ethical implications, particularly fairness. In this post, we examine what AI fairness means, drawing on expert insights and opinion pieces from researchers, industry leaders, and affected communities.

What is AI Fairness?

AI fairness refers to the equitable treatment of individuals and groups by AI systems: these systems should not perpetuate or amplify existing biases. In practice, it means identifying and mitigating biases that could lead to unfair treatment based on attributes such as race, gender, or socioeconomic status.
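
Fairness has several competing formal definitions. One of the simplest, demographic parity, asks whether a system gives positive decisions at similar rates across groups. The sketch below is minimal and rests on some assumptions: decisions are binary and there is a single group attribute. The function names and toy data are hypothetical and were made up for illustration.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the fraction of positive (1) decisions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(decisions, groups):
    """Largest gap in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan decisions (1 = approved) for applicants in groups "A" and "B".
decisions = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(decisions, groups))  # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(decisions, groups))  # 0.5
```

A large gap is a signal to investigate, not proof of discrimination. Other criteria compare error rates across groups rather than decision rates, such as equalized odds. These criteria can conflict with demographic parity, so which one applies depends on the context.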

Why AI Fairness Matters

- Societal Impact: AI systems influence critical sectors such as healthcare, finance, and criminal justice, where biased decisions can affect people's access to care, credit, or even their liberty. Ensuring fairness is crucial to preventing systemic discrimination.

- Trust and Adoption: Fair AI systems are more likely to be trusted and widely adopted by users.

- Legal and Ethical Obligations: A growing number of jurisdictions are introducing regulations that address fairness in AI, making it a legal requirement as well as an ethical one.

Expert Insights on AI Fairness

Dr. Timnit Gebru on Bias in AI

Dr. Timnit Gebru, an advocate for diversity in AI research, emphasizes the importance of addressing bias present in datasets. She argues that biased datasets lead to biased algorithms, which can result in discriminatory outcomes.

"The datasets used to train AI models must reflect the diversity of the population they represent. Without it, we're perpetuating existing biases." — Dr. Timnit Gebru

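A rough way to act on this point is to compare each group's share of a training set with its share of the population the model will serve. The sketch below is minimal and built on assumptions. The group labels, the reference shares and the 0.8 tolerance are all hypothetical.

```python
from collections import Counter


def representation_report(dataset_groups, population_shares, tolerance=0.8):
    """Compare each group's share of a dataset with its share of a reference population.

    A group is flagged as under-represented when its dataset share is below
    `tolerance` times its population share (0.8 is an arbitrary, assumed threshold).
    """
    n = len(dataset_groups)
    if n == 0:
        raise ValueError("dataset_groups must not be empty")
    counts = Counter(dataset_groups)
    report = {}
    for group, pop_share in population_shares.items():
        data_share = counts.get(group, 0) / n
        report[group] = {
            "dataset_share": data_share,
            "population_share": pop_share,
            "under_represented": data_share < tolerance * pop_share,
        }
    return report


# Hypothetical group labels for 100 training records and hypothetical population shares.
records = ["A"] * 70 + ["B"] * 25 + ["C"] * 5
population = {"A": 0.6, "B": 0.3, "C": 0.1}

for group, row in representation_report(records, population).items():
    print(group, row)
```

In this toy example, group C makes up 5% of the records but 10% of the reference population, so it is flagged. Matching proportions alone is not sufficient. A proportionally balanced dataset can still carry biased labels or measurements.
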
Prof. Kate Crawford on Ethical AI

Prof. Kate Crawford highlights the need for transparency and accountability in AI systems. She advocates for the inclusion of diverse voices in AI development to ensure a variety of perspectives are considered.

"AI ethics isn't just about algorithms; it's about including voices that reflect the diversity of the world." — Prof. Kate Crawford

Opinion Pieces: Diverse Perspectives

Perspectives from Industry Leaders

Several industry leaders, such as Sundar Pichai of Google, have spoken publicly about their commitment to AI fairness. Companies are implementing initiatives such as diversity training for AI teams and fairness audits to address bias in their systems.

Community Voices

Community-driven opinion pieces highlight the real-world consequences of unfair AI. They often recount cases in which biased AI systems harmed individuals, underscoring the urgent need for fairness.

"The stories we hear from communities affected by AI bias emphasize the importance of ethical AI implementation." — Anonymous Community Contributor

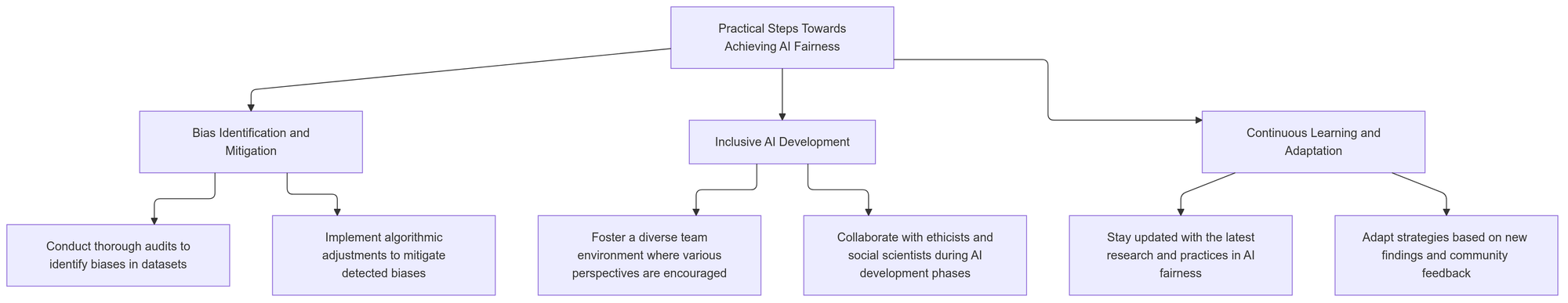

Practical Steps Towards Achieving AI Fairness

- Bias Identification and Mitigation:
  - Conduct thorough audits to identify biases in datasets.
  - Implement algorithmic adjustments to mitigate detected biases.

- Inclusive AI Development:
  - Foster a diverse team environment where various perspectives are encouraged.
  - Collaborate with ethicists and social scientists throughout AI development.

- Continuous Learning and Adaptation:
  - Stay up to date with the latest research and practices in AI fairness.
  - Adapt strategies based on new findings and community feedback.
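
The two bias steps above can be sketched on a labeled dataset. This is a minimal illustration under stated assumptions, not a complete audit.

- The audit step measures the rate of positive labels in each group.
- The mitigation step applies reweighing, a pre-processing technique described by Kamiran and Calders. Each example gets the weight P(group) * P(label) / P(group, label), which makes group and label independent in the weighted data.

The function names and toy data are hypothetical. Reweighing is only one of many mitigation options; others include resampling, fairness-constrained training and post-processing of decision thresholds. It was chosen here because it is short.

```python
from collections import Counter


def positive_rate_by_group(labels, groups):
    """Audit step: fraction of positive labels (1) within each group."""
    totals = Counter(groups)
    positives = Counter(g for y, g in zip(labels, groups) if y == 1)
    return {g: positives[g] / totals[g] for g in totals}


def reweighing_weights(labels, groups):
    """Mitigation step: reweighing (Kamiran and Calders).

    Each example in cell (group g, label y) gets weight
    P(g) * P(y) / P(g, y), so group and label are independent
    in the weighted data.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    weights = []
    for y, g in zip(labels, groups):
        # Count this (group, label) cell would have if group and label were independent.
        expected = group_counts[g] * label_counts[y] / n
        weights.append(expected / joint_counts[(g, y)])
    return weights


def weighted_positive_rate_by_group(labels, groups, weights):
    """Positive-label rate per group when each example counts with its weight."""
    total = Counter()
    positive = Counter()
    for y, g, w in zip(labels, groups, weights):
        total[g] += w
        if y == 1:
            positive[g] += w
    return {g: positive[g] / total[g] for g in total}


# Hypothetical historical outcomes (1 = favorable) for groups "A" and "B".
labels = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(positive_rate_by_group(labels, groups))  # {'A': 0.75, 'B': 0.25}
weights = reweighing_weights(labels, groups)
print(weighted_positive_rate_by_group(labels, groups, weights))  # both groups close to 0.5
```

Many scikit-learn estimators accept per-example weights through the `sample_weight` argument of `fit`, so the resulting weights can be passed in there. Reweighing leaves the data itself untouched, which keeps it easy to audit. However, it only balances the training signal and does not guarantee fair predictions. The audit step should be repeated on the trained model's outputs.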

Conclusion: The Path Forward

Achieving AI fairness is a complex but crucial objective. By drawing on expert insights and community-driven perspectives, we can create AI systems that are equitable and trustworthy. The journey requires constant vigilance, adaptation, and a commitment to ethical standards.

As AI continues to evolve, so must our efforts to ensure fairness, making it an integral part of the development process.

Join the conversation: share your thoughts and experiences with AI fairness in the comments below!