Artificial Intelligence (AI) has revolutionized various industries, offering major gains in efficiency, data processing, and decision-making. However, its misuse in fraudulent activities has raised significant concerns across the globe. In this post, we'll explore how AI is misused in fraud, review expert opinions, and discuss strategies for preventing it.

Understanding AI-Driven Fraud

AI technologies, particularly machine learning and deep learning, can be exploited to commit sophisticated fraud. Examples include generating deepfakes, automating phishing attacks, and stealing identities. The implications are vast: victims can suffer significant financial losses and reputational damage.

Common Types of AI-Facilitated Fraud

- Deepfakes

- Deepfakes involve AI-generated audio and video content that looks or sounds authentic. Criminals use them for extortion, misinformation, and impersonation.

Caption: Deepfake technology showcasing the potential for realistic audio-visual manipulation.

- Automated Phishing

- AI can automate phishing attacks by generating personalized, convincing messages at scale, which makes fraudulent communications much harder for recipients to spot.

Caption: How AI tools enhance phishing attacks by personalizing fraudulent emails.

- Identity Theft

- AI can quickly analyze personal data exposed in breaches and use it to impersonate individuals, leading to unauthorized transactions and account access.

Expert Insights on AI and Fraud

To gain a deeper understanding, let's look at some expert opinions:

"The sophistication of AI technologies has, unfortunately, been mirrored in the sophistication of fraudulent activities. Vigilance and robust AI ethics are needed now more than ever."

— Dr. Emily Zhao, Cybersecurity Expert

"AI's ability to analyze vast data sets is incredible, but it becomes a double-edged sword when used unethically. Collaboration between technology providers and regulators is crucial to curb AI-enabled fraud."

— John Smith, AI Ethics Researcher

Prevention Strategies

Preventing AI-driven fraud requires a multi-layered approach involving technology, regulation, and education. Here are some strategies recommended by experts:

1. Advanced AI Detection Tools

Deploy AI to counteract fraudulent activities by detecting anomalies in behavior and transactions. Machine learning algorithms can learn from vast datasets to identify patterns indicative of fraud.
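To make the idea of anomaly detection concrete, here is a minimal sketch in Python. It is not a machine learning model: it uses a simple statistical rule (a modified z-score based on the median) as a stand-in for the learned detectors described above. The function name `flag_anomalies`, the threshold of 3.5, and the sample amounts are all assumptions chosen for illustration, not values from any production system.

```python
from statistics import median


def flag_anomalies(amounts, threshold=3.5):
    """Return the indices of amounts that deviate strongly from the rest.

    Uses the modified z-score: distance from the median, scaled by the
    median absolute deviation (MAD). The threshold is an illustrative choice.
    """
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        # No spread in the data, so there is no baseline to compare against.
        return []
    # 0.6745 rescales the MAD so the score is comparable to a standard
    # z-score when the data is roughly normally distributed.
    return [
        i
        for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]


# Hypothetical transaction history for one account (amounts in dollars).
history = [42.0, 38.5, 51.2, 47.9, 40.3, 44.1, 39.8, 2500.0, 45.6, 43.2]

for index in flag_anomalies(history):
    print(f"Transaction {index} looks unusual: {history[index]:.2f}")
```

The median is used instead of the mean because a single very large transaction would otherwise inflate the baseline it is being compared against. A real fraud system would likely combine many more signals (location, device, timing, merchant) and a trained model, but the principle is the same: learn what normal looks like, then flag what departs from it.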

2. User Education and Awareness

Educating users about the potential threats and indicators of AI-driven fraud is a critical step in prevention. Awareness programs should cover recognizing phishing attempts, securing personal data, and understanding deepfakes.

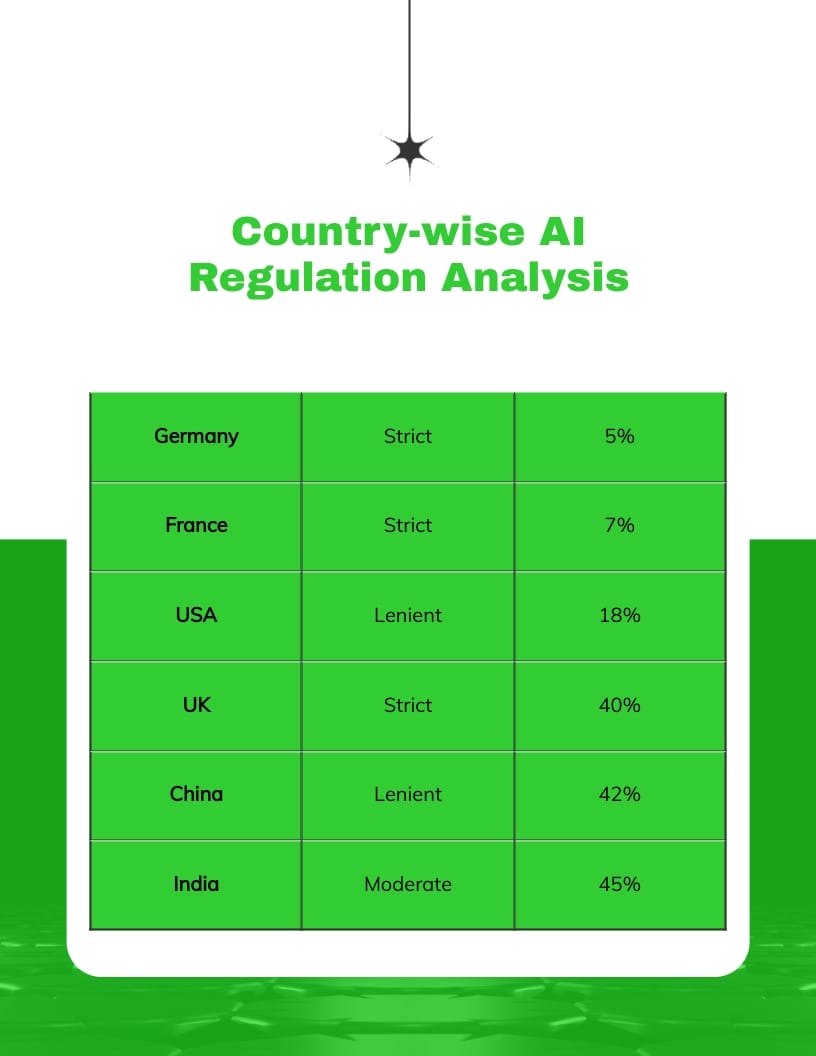

3. Regulatory Measures

Governments and regulatory bodies need to craft policies that address AI misuse. This includes setting standards for AI transparency and accountability, and imposing penalties for violations.

4. Cross-Industry Collaboration

Collaboration between financial institutions, tech companies, and cybersecurity experts is vital for sharing insights and developing comprehensive anti-fraud technologies and practices.

Tip: Stay updated on emerging AI tools that contribute to security solutions as well as those that could be used for malicious activities.

5. Ethical AI Development

Encourage ethical AI development by building security measures in from the design stage. Transparency in AI processes and regular auditing can help ensure the technology is not misused.

Conclusion

While AI significantly benefits society, its potential misuse cannot be ignored. By employing robust prevention strategies, enhancing awareness, and fostering collaboration among industries and regulators, we can reduce the risk of AI-enabled fraud. Continuous vigilance and adaptation are essential as the technology evolves, so that AI remains a force for good.

Remember to engage with the community by sharing your thoughts on AI fraud and prevention strategies in the comments below. Your insights could help shape robust defenses against these growing threats.